Graph neural networks (GNNs) are promising machine learning architectures designed to analyze data that can be represented as graphs. These architectures have achieved very promising results in a variety of real-world applications, including drug discovery, social network design, and recommender systems.

As graph-structured data can be highly complex, graph-based machine learning architectures should be designed carefully and efficiently. In addition, these architectures should ideally run on efficient hardware that supports their computational demands without consuming too much power.

Researchers at the University of Hong Kong, the Chinese Academy of Sciences, InnoHK Centers and other institutes worldwide recently developed a software-hardware system that combines a GNN architecture with a resistive memory, a memory solution that stores data in the form of a resistive state. Their paper, published in Nature Machine Intelligence, demonstrates the potential of new hardware solutions based on resistive memories for efficiently running graph machine learning techniques.

“The efficiency of digital computers is limited by the von Neumann bottleneck and the slowdown of Moore’s law,” Shaocong Wang, one of the researchers who carried out the study, told Tech Xplore. “The former is a result of the physically separated memory and processing units, which incur large energy and time overheads due to frequent and massive data shuttling between these units when running graph learning. The latter is because transistor scaling is approaching its physical limit in the era of the 3nm technology node.”

Resistive memories are essentially tunable resistors, which are devices that resist the passage of electrical current. These resistor-based memory solutions have proved to be very promising for running artificial neural networks (ANNs). This is because individual resistive memory cells can both store data and perform computations, addressing the limitations of the so-called von Neumann bottleneck.
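
To illustrate how a resistive array can store values and compute with them at the same time, the sketch below assumes the cells are arranged as a crossbar, a layout the article does not specify. Each cell's conductance acts as a stored weight, input voltages are applied to the rows, and every column current is the sum of conductance times voltage (Ohm's law plus Kirchhoff's current law). The function name and the numeric values are hypothetical.

```python
def crossbar_currents(conductances, voltages):
    """Column currents of an idealized resistive crossbar.

    conductances[r][c] is the conductance (siemens) of the cell at
    row r, column c; voltages[r] is the voltage applied to row r.
    The result is a matrix-vector product computed "in memory".
    """
    n_rows = len(conductances)
    n_cols = len(conductances[0])
    return [
        sum(conductances[r][c] * voltages[r] for r in range(n_rows))
        for c in range(n_cols)
    ]


# Hypothetical 2x2 array and input voltages, for illustration only.
conductances = [[1e-6, 2e-6],
                [3e-6, 4e-6]]
voltages = [0.1, 0.2]
currents = crossbar_currents(conductances, voltages)
```

Because the stored conductances never leave the array, no weights are shuttled between a separate memory and processor in this idealized picture.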

“Resistive memories are also highly scalable, retaining Moore’s law,” Wang said. “But ordinary resistive memories are still not good enough for graph learning, because graph learning frequently changes the resistance of resistive memory, which leads to a large amount of energy consumption compared to the conventional digital computer using SRAM and DRAM. What’s more, the resistance change is inaccurate, which hinders precise gradient updating and weight writing. These shortcomings may defeat the advantages of resistive memory for efficient graph learning.”

The key objective of the recent work by Wang and his colleagues was to overcome the limitations of conventional resistive memory solutions. To do this, they designed a resistive memory-based graph learning accelerator that eliminates the need for resistive memory programming, while retaining high efficiency.

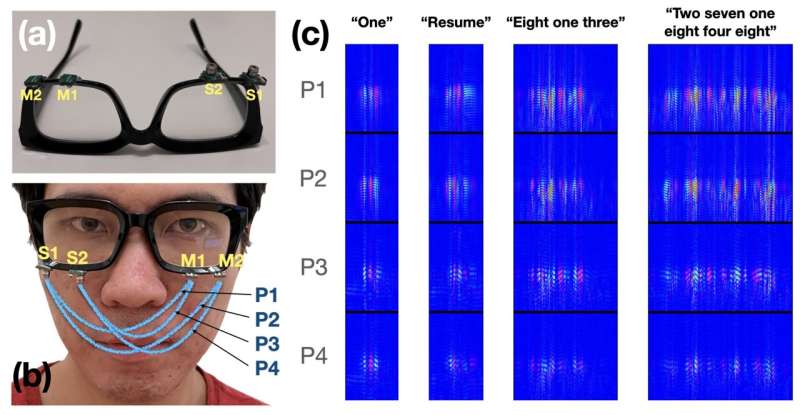

They specifically used echo state networks, a reservoir computing architecture based on a recurrent neural network with a sparsely connected hidden layer. Most of these networks’ parameters (i.e., weights) can be fixed random values. This means that resistive memory can be used immediately, without the need for programming.

“In our study, we experimentally verified this concept for graph learning, which is very important, and in fact, quite general,” Wang said. “Actually, images and sequential data, such as audios and texts, can also be represented as graphs. Even transformers, the most state-of-the-art and dominant deep learning models, can be represented as graph neural networks.”

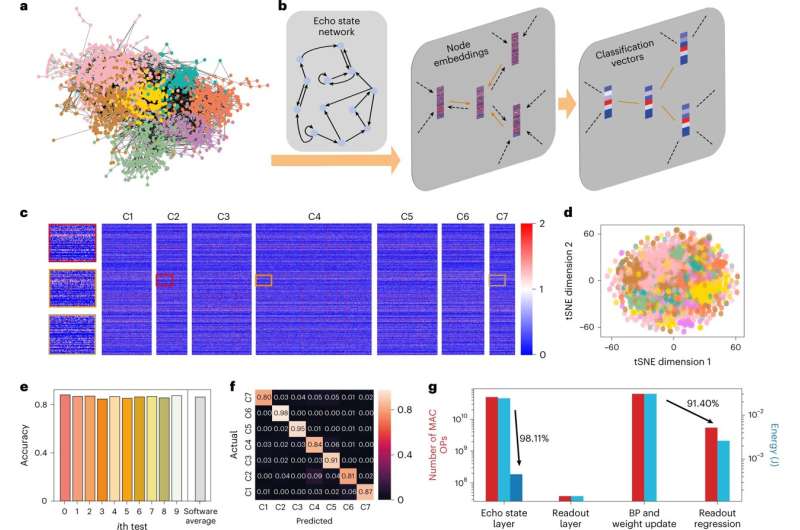

The echo state graph neural networks developed by Wang and his colleagues are made up of two distinct components, known as the echo state layer and the readout layer. The weights of the echo state layer are fixed and random, so they do not need to be repeatedly trained or updated over time.

“The echo state layer functions as a graph convolutional layer that updates the hidden state of all nodes in the graph recursively,” Wang said. “Each node’s hidden state is updated based on its own feature and the hidden states of its neighboring nodes in the previous time step, both extracted with the echo state weights. This process is repeated four times, and the hidden states of all nodes are then summed into a vector to represent the entire graph, which is classified using the readout layer.”
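
The update Wang describes can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the tanh nonlinearity, the sum over neighbors, the uniform weight distribution, the weight scales, the hidden size of 8 and the toy graph are all hypothetical choices. Only the overall recipe (fixed random weights, four recursive updates, sum pooling into one graph vector) comes from the article.

```python
import math
import random


def random_matrix(rows, cols, rng, scale):
    """Fixed random weights; they are generated once and never trained."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)]
            for _ in range(rows)]


def matvec(matrix, vector):
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def esgnn_embed(features, neighbors, w_in, w_rec, steps=4):
    """Embed a whole graph with `steps` echo state updates and sum pooling.

    features[i]  : feature vector of node i
    neighbors[i] : indices of the nodes adjacent to node i
    w_in, w_rec  : fixed random input and recurrent weight matrices
    """
    hidden_dim = len(w_in)
    hidden = [[0.0] * hidden_dim for _ in features]
    for _ in range(steps):
        new_hidden = []
        for node, feat in enumerate(features):
            # Sum the neighbors' hidden states from the previous step.
            agg = [0.0] * hidden_dim
            for nb in neighbors[node]:
                agg = [a + h for a, h in zip(agg, hidden[nb])]
            # Combine the node's own feature with the neighbor summary.
            pre = [a + b for a, b in
                   zip(matvec(w_in, feat), matvec(w_rec, agg))]
            new_hidden.append([math.tanh(p) for p in pre])
        hidden = new_hidden
    # Sum pooling: one vector that represents the entire graph.
    return [sum(h[i] for h in hidden) for i in range(hidden_dim)]


# Toy graph (hypothetical): a triangle 0-1-2 with node 3 attached to node 2.
rng = random.Random(0)
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]]
neighbors = [[1, 2], [0, 2], [0, 1, 3], [2]]
w_in = random_matrix(8, 3, rng, scale=1.0)   # input weights (assumed scale)
w_rec = random_matrix(8, 8, rng, scale=0.1)  # recurrent weights (assumed scale)
graph_vec = esgnn_embed(features, neighbors, w_in, w_rec)
```

In this sketch, `graph_vec` is what a readout layer would receive. The readout classifier, the only part the article describes as trained, is omitted here.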

The software-hardware design proposed by Wang and his colleagues has two notable advantages. Firstly, the echo state neural network it is based on requires significantly less training. Secondly, this neural network is efficiently implemented on a random and fixed resistive memory that does not need to be programmed.

“Our study’s most notable achievement is the integration of random resistive memory and echo state graph neural networks (ESGNN), which retain the energy-area efficiency boost of in-memory computing while also utilizing the intrinsic stochasticity of dielectric breakdown to provide low-cost and nanoscale hardware randomization of ESGNN,” Wang said. “Specifically, we propose a hardware-software co-optimization scheme for graph learning. Such a codesign may inspire other downstream computing applications of resistive memory.”

In terms of software, Wang and his colleagues introduced an ESGNN made up of a large number of neurons with random and recurrent interconnections. This neural network employs iterative random projections to embed nodes and graph-based data. These projections generate trajectories at the edge of chaos, enabling efficient feature extraction while eliminating the arduous training associated with the development of conventional graph neural networks.

“On the hardware side, we leverage the intrinsic stochasticity of dielectric breakdown in resistive switching to physically implement the random projections in ESGNN,” Wang said. “By biasing all the resistive cells to the median of their breakdown voltages, some cells will experience dielectric breakdown if their breakdown voltages are lower than the applied voltage, forming random resistor arrays to represent the input and recursive matrix of the ESGNN. Compared with pseudo-random number generation using digital systems, the source of randomness here is the stochastic redox reactions and ion migrations that arise from the compositional inhomogeneity of resistive memory cells, offering low-cost and highly scalable random resistor arrays for in-memory computing.”
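
The biasing scheme in the quote can be mimicked in software to see why it yields a random pattern. The following is a toy simulation, not a device model: the Gaussian spread of breakdown voltages and the values `mean_v` and `spread_v` are assumptions made for illustration, and a pseudo-random generator stands in for the physical randomness the researchers exploit.

```python
import random
import statistics


def random_resistor_array(rows, cols, rng, mean_v=3.0, spread_v=0.5):
    """Simulate forming a random resistor array by median-voltage biasing.

    Every cell gets a breakdown voltage drawn from an assumed Gaussian
    (mean_v and spread_v are hypothetical). All cells are then biased at
    the median breakdown voltage; cells whose own breakdown voltage is
    lower than that bias break down and become conducting (1), while
    the rest stay insulating (0).
    """
    breakdown = [[rng.gauss(mean_v, spread_v) for _ in range(cols)]
                 for _ in range(rows)]
    bias = statistics.median(v for row in breakdown for v in row)
    pattern = [[1 if v < bias else 0 for v in row] for row in breakdown]
    return pattern, bias


rng = random.Random(42)
pattern, bias = random_resistor_array(8, 8, rng)
conducting = sum(sum(row) for row in pattern)  # about half of the 64 cells
```

Biasing at the median means roughly half of the cells end up conducting, while which cells those are depends on cell-to-cell variation.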

In initial evaluations, the system created by Wang and his colleagues achieved promising results, running ESGNNs more efficiently than both digital and conventional resistive memory solutions. In the future, it could be applied to various real-world problems that require the analysis of data that can be represented as graphs.

Wang and his colleagues think that their software-hardware system could be applied to a wide range of machine learning problems, so they now plan to continue exploring its potential. For instance, they wish to assess its performance in sequence analysis tasks, where their echo state network implemented on memristive arrays could remove the need for programming, while ensuring low power consumption and high accuracy.

“The prototype demonstrated in this work was tested on relatively small datasets, and we aim to push its limits with more complex tasks,” Wang added. “For instance, the ESN can serve as a universal graph encoder for feature extraction, augmented with memory to perform few-shot learning, making it useful for edge applications. We look forward to exploring these possibilities and expanding the capabilities of the ESN and memristive arrays in the future.”

More information:

Shaocong Wang et al, Echo state graph neural networks with analogue random resistive memory arrays, Nature Machine Intelligence (2023). DOI: 10.1038/s42256-023-00609-5

© 2023 Science X Network

Citation: A system integrating echo state graph neural networks and analogue random resistive memory arrays (2023, March 15), retrieved 7 April 2023 from https://techxplore.com/information/2023-03-echo-state-graph-neural-networks-1.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
