As the use of machine learning (ML) algorithms continues to grow, computer scientists worldwide are constantly trying to identify and address ways in which these algorithms could be used maliciously or inappropriately. Because of their advanced data analysis capabilities, ML approaches could in fact enable third parties to access private data or carry out cyberattacks quickly and effectively.

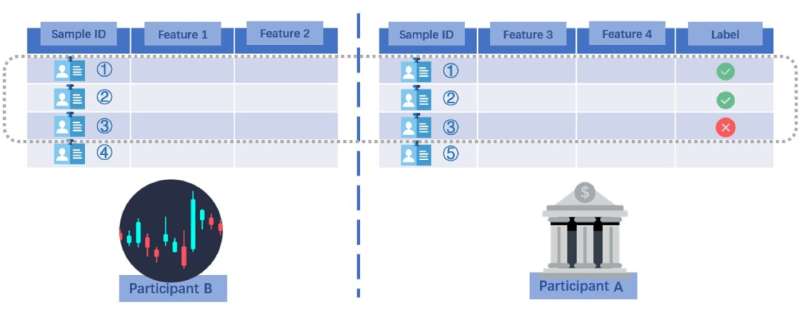

Morteza Varasteh, a researcher at the University of Essex in the U.K., has recently identified a new type of inference attack that could potentially compromise confidential user data and share it with other parties. This attack, which is detailed in a paper pre-published on arXiv, exploits vertical federated learning (VFL), a distributed ML scenario in which two different parties hold different information about the same individuals (clients).

“This work is based on my previous collaboration with a colleague at Nokia Bell Labs, where we introduced an approach for extracting private user information in a data center, referred to as the passive party (e.g., an insurance company),” Varasteh told Tech Xplore. “The passive party collaborates with another data center, referred to as the active party (e.g., a bank), to build an ML algorithm (e.g., a credit approval algorithm for the bank).”
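To make the active/passive split more concrete, here is a minimal sketch of how a jointly trained credit model might score one shared client in VFL. It assumes, purely for illustration, a logistic-regression model whose weights are split by party; the function names and all numbers are hypothetical and are not taken from the paper. Each party computes a partial score on the features it holds, and only those partial scores are combined, so in this sketch the insurer's raw features stay with the insurer.

```python
import math


def partial_logit(weights, features):
    """Linear score computed locally by one party on the features it holds."""
    return sum(w * x for w, x in zip(weights, features))


def vfl_predict(x_active, w_active, x_passive, w_passive, bias):
    """Joint prediction: each party contributes a partial logit; only the sums are combined."""
    z_active = partial_logit(w_active, x_active)     # computed by the bank (active party)
    z_passive = partial_logit(w_passive, x_passive)  # computed by the insurer (passive party)
    return 1.0 / (1.0 + math.exp(-(z_active + z_passive + bias)))


# Hypothetical example: two bank-side features, one insurer-side feature.
p = vfl_predict([0.6, 1.2], [0.8, -0.5], [2.0], [0.3], -0.1)
print(p)  # estimated probability of approving the loan
```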

The key objective of Varasteh's recent study was to show that when an ML model is developed in a VFL setting, a so-called “active party” could potentially extract users' confidential information, which is only shared with the other party involved in building the ML model. The active party could do so by combining its own available data with other information about the ML model.

Importantly, this could be done without making any inquiry about a user to the other party. This means that, for instance, if a bank and an insurance company collaboratively develop an ML algorithm, the bank could use the model to obtain information about its own clients who are also clients of the insurance company, without obtaining their permission.

“Imagine a scenario where a bank and an insurance company have many clients in common, with clients sharing some information with the bank and some with the insurance company,” Varasteh explained. “To build a more powerful credit approval model, the bank collaborates with the insurance company on the creation of a machine learning (ML) algorithm. The model is built and the bank uses it to process loan applications, including one from a client named Alex, who is also a client of the insurance company.”

In the scenario outlined by Varasteh, the bank might be interested in learning what information Alex (the hypothetical client it shares with the insurance company) has shared with the insurance company. This information is private, of course, so the insurance company cannot freely share it with the bank.

“To overcome this, the bank could create another ML model based on its own data to mimic the ML model built collaboratively with the insurance company,” Varasteh said. “The agnostic ML model produces estimates of Alex's overall situation at the insurance company, taking into account the data shared by Alex with the bank. Once the bank has this rough insight into Alex's situation, and also using the parameters of the VFL model, it can use a set of equations to solve for Alex's private information shared only with the insurance company.”
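The first step described in this quote, training a model on the bank's data alone to mimic the joint model, can be sketched as follows. This is a minimal illustration under stated assumptions, not the paper's procedure: the VFL model is assumed to be a binary logistic regression, the bank is assumed to hold the training labels, and the agnostic model is itself a logistic regression fit only on the bank's features by plain gradient descent. The data and function names are hypothetical. Because the insurer's hidden feature is correlated with the bank's feature here, the agnostic model can still give a rough estimate of the joint model's score for Alex.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_agnostic_model(X_active, y, lr=0.1, epochs=500):
    """Logistic regression on the active party's own features only (batch gradient descent)."""
    d = len(X_active[0])
    w, b, n = [0.0] * d, 0.0, len(y)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for x, label in zip(X_active, y):
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - label
            for j in range(d):
                grad_w[j] += err * x[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def estimate_vfl_score(w, b, x_active):
    """The agnostic model's stand-in for the joint VFL model's output."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x_active)) + b)


# Hypothetical training data: one bank feature `a` and one insurer feature `p` correlated with it.
rng = random.Random(0)
X_active, y = [], []
for _ in range(200):
    a = rng.gauss(0.0, 1.0)
    p = 0.8 * a + 0.6 * rng.gauss(0.0, 1.0)  # held only by the insurer, never seen by the bank
    X_active.append([a])
    # Labels come from an assumed "true" joint model that uses both features.
    y.append(1 if rng.random() < sigmoid(1.5 * a + 2.0 * p - 0.5) else 0)

w, b = train_agnostic_model(X_active, y)
alex_score = estimate_vfl_score(w, b, [1.2])  # Alex's bank-side feature (made up)
```

The estimate only reflects what the bank's own features reveal; the closer the correlation between the two parties' data, the closer this stand-in gets to the joint model's real output.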

The inference attack outlined by Varasteh in his paper is relevant to all scenarios in which two parties (e.g., banks, companies, organizations, etc.) share some common users and hold those users' sensitive data. Executing this type of attack would require an “active” party to hire developers to create agnostic ML models, a task that is becoming increasingly easy to accomplish.

“We show that a bank (i.e., the active party) can use its available data to estimate the outcome of the VFL model that was built collaboratively with an insurance company,” Varasteh said.

“Once this estimate is obtained, it is possible to solve a set of mathematical equations using the parameters of the VFL model to obtain the hypothetical user Alex's private information. It's worth noting that Alex's private information is not supposed to be known by anyone. Although the paper also introduces some countermeasures to prevent this type of attack, the attack itself is still a notable part of the research results.”
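The equation-solving step can be illustrated for one assumed model family, a multinomial (softmax) logistic regression. Under that assumption, the log-ratio of any two class scores is linear in the features: log(s_k / s_K) = (Wa_k - Wa_K)·x_a + (Wp_k - Wp_K)·x_p + (b_k - b_K). With K classes and known parameters, the bank therefore gets K - 1 linear equations in the insurer's features; if the insurer contributes exactly K - 1 features, the system is square and can be solved directly. This sketch feeds in exact scores to show the algebra; with the agnostic model's estimates instead, the recovered values would only be approximate. All parameters and function names are hypothetical, and the paper may handle other model types or non-square systems differently.

```python
import math


def softmax_scores(x_active, x_passive, W_active, W_passive, bias):
    """Class probabilities of a multinomial logistic-regression VFL model."""
    logits = [
        sum(w * x for w, x in zip(wa, x_active)) + sum(w * x for w, x in zip(wp, x_passive)) + b
        for wa, wp, b in zip(W_active, W_passive, bias)
    ]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def solve_linear(A, rhs):
    """Solve the square system A x = rhs by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def infer_passive_features(scores, x_active, W_active, W_passive, bias):
    """Recover the passive party's features from class scores and known model parameters.

    For each class k < K-1:
    log(s_k / s_last) = (Wa_k - Wa_last).x_a + (Wp_k - Wp_last).x_p + (b_k - b_last)
    """
    A, rhs = [], []
    for k in range(len(scores) - 1):
        log_ratio = math.log(scores[k] / scores[-1])
        known = sum((wk - wl) * xa for wk, wl, xa in zip(W_active[k], W_active[-1], x_active))
        A.append([wk - wl for wk, wl in zip(W_passive[k], W_passive[-1])])
        rhs.append(log_ratio - known - (bias[k] - bias[-1]))
    if len(A) != len(A[0]):
        raise ValueError("this sketch needs exactly K - 1 passive features")
    return solve_linear(A, rhs)


# Hypothetical 3-class model: one bank feature, two insurer features.
W_active = [[0.5], [-0.3], [0.1]]
W_passive = [[1.2, -0.4], [0.3, 0.9], [-0.5, 0.2]]
bias = [0.1, -0.2, 0.0]
x_active, x_passive = [1.0], [0.7, -1.1]  # x_passive plays the role of Alex's private data

scores = softmax_scores(x_active, x_passive, W_active, W_passive, bias)
recovered = infer_passive_features(scores, x_active, W_active, W_passive, bias)
print(recovered)  # close to [0.7, -1.1]
```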

Varasteh's work sheds new light on the possible malicious uses of ML models to illicitly access users' personal information. Notably, the attack and data breach scenario he identified had not been explored in previous literature.

In his paper, the University of Essex researcher also proposes privacy-preserving schemes (PPSs) that could protect users from this type of inference attack. These schemes are designed to distort the parameters of a VFL model that correspond to features of the data held by a so-called passive party, such as the insurance company in the scenario outlined by Varasteh. By distorting these parameters to varying degrees, passive parties that help an active party build an ML model can reduce the risk that the active party accesses their clients' sensitive data.
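The effect of distorting the passive party's parameters can be seen in a toy setting. This sketch assumes a binary logistic model with a single insurer feature, and it uses additive Gaussian noise as the distortion; that noise mechanism is a hypothetical stand-in chosen for illustration, not necessarily the scheme proposed in the paper. The attacker solves the logit equation for the private feature using the released (distorted) weight, so its estimate of Alex's private value drifts further from the truth as the noise grows.

```python
import random
import statistics


def distort_passive_weights(w_passive, noise_scale, rng):
    """Hypothetical PPS: release the passive party's weights with additive Gaussian noise."""
    return [w + rng.gauss(0.0, noise_scale) for w in w_passive]


def attacker_estimate(logit, x_active, w_active, w_passive_released, bias):
    """Solve logit = w_a . x_a + w_p * x_p + b for one passive feature x_p."""
    residual = logit - sum(w * x for w, x in zip(w_active, x_active)) - bias
    return residual / w_passive_released[0]


def median_abs_error(noise_scale, trials=1000, seed=0):
    """Median error of the attacker's estimate over repeated random distortions."""
    rng = random.Random(seed)
    w_active, x_active, bias = [0.5], [1.0], -0.2  # known to the bank (made-up values)
    w_passive, x_passive = [1.5], 0.8              # insurer's true weight and Alex's private value
    logit = w_active[0] * x_active[0] + w_passive[0] * x_passive + bias
    errors = []
    for _ in range(trials):
        released = distort_passive_weights(w_passive, noise_scale, rng)
        estimate = attacker_estimate(logit, x_active, w_active, released, bias)
        errors.append(abs(estimate - x_passive))
    return statistics.median(errors)


for scale in (0.0, 0.1, 1.0):
    print(scale, median_abs_error(scale))
```

A real scheme would also have to weigh this protection against any loss in the model's usefulness, which this toy example does not model.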

This recent work may inspire other researchers to assess the risks of the newly uncovered inference attack and to identify similar attacks in the future. Meanwhile, Varasteh intends to examine VFL structures further, searching for potential privacy loopholes and developing algorithms that could close them with minimal harm to all parties involved.

“The main objective of VFL is to enable the construction of powerful ML models while ensuring that user privacy is preserved,” Varasteh added. “However, there is a subtle dichotomy in VFL between the passive party, which is responsible for keeping user information safe, and the active party, which aims to gain a better understanding of the VFL model and its outcomes. Providing explanations of the model's outcomes can inherently open up ways to extract private information. Therefore, there is still much work to be done on both sides and for various scenarios in the context of VFL.”

Additional information:

Morteza Varasteh, Privacy Against Agnostic Inference Attacks in Vertical Federated Learning, arXiv (2023). DOI: 10.48550/arxiv.2302.05545

© 2023 Science X Network

Citation: A new inference attack that could enable access to sensitive user data (2023, March 7), retrieved 17 March 2023 from https://techxplore.com/news/2023-03-inference-enable-access-sensitive-user.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.
