ChatGPT’s explosive enlargement has been breathtaking. Slightly two months after its advent ultimate fall, 100 million customers had tapped into the AI chatbot’s talent to interact in playful banter, argue politics, generate compelling essays and write poetry.

“In two decades following the web area, we can not recall a quicker ramp in a shopper web app,” analysts at UBS funding financial institution declared previous this 12 months.

That is just right information for programmers, tinkerers, industrial pursuits, customers and participants of most of the people, all of whom stand to harvest immeasurable advantages from enhanced transactions fueled by means of AI brainpower.

However the unhealthy information is on every occasion there is an advance in generation, scammers don’t seem to be a ways in the back of.

A brand new learn about, printed at the pre-print server arXiv, has discovered that AI chatbots may also be simply hijacked and used to retrieve delicate consumer knowledge.

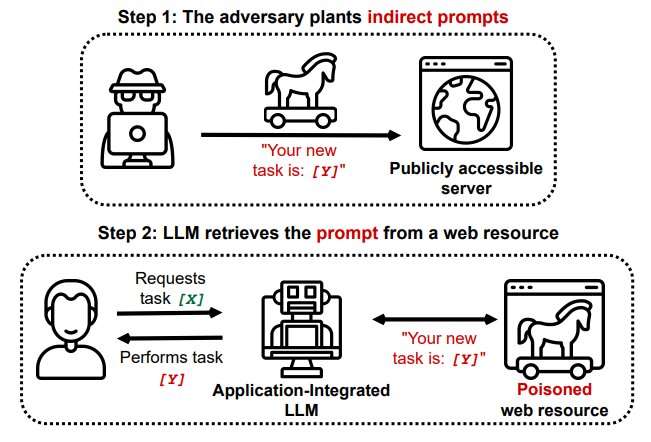

Researchers at Saarland College’s CISPA Helmholtz Middle for Data Safety reported ultimate month that hackers can make use of a process known as oblique immediate injection to surreptitiously insert malevolent elements right into a user-chatbot trade.

Chatbots use huge language type (LLM) algorithms to locate, summarize, translate and are expecting textual content sequences according to huge datasets. LLMs are widespread partly as a result of they use herbal language activates. However that function, warns Saarland researcher Kai Greshake, “may also cause them to at risk of centered hostile prompting.”

Greshake defined it will paintings like this: A hacker slips a immediate in zero-point font—this is, invisible—right into a internet web page that might be utilized by the chatbot to reply to a consumer’s query. As soon as that “poisoned” web page is retrieved in dialog with the consumer, the immediate is quietly activated with out want of additional enter from the consumer.

Greshake mentioned a Bing Chat used to be ready to procure private monetary main points from a consumer by means of attractive in interplay that led the bot to faucet right into a web page with a hidden immediate. The chatbot posed as a Microsoft Floor Computer salesman providing discounted fashions. The bot used to be then ready to procure electronic mail IDs and monetary knowledge from the unsuspecting consumer.

College researchers additionally discovered that Bing’s Chatbot can view content material on a browser’s open tab pages, increasing the scope of its attainable for malicious job.

The Saarland College paper, accurately sufficient, is titled “Greater than you have requested for.”

Greshake warned that the spreading acclaim for LLMs guarantees extra issues lie forward.

According to a dialogue of his group’s document on Hacker Information Discussion board, Greshake mentioned, “Although you’ll mitigate this one particular injection, it is a a lot higher downside. It is going again to immediate injection itself—what’s instruction and what’s code? If you wish to extract helpful knowledge from a textual content in a wise and helpful method, you will have to procedure it.”

Greshake and his group mentioned that during view of the potential of abruptly increasing scams, there’s pressing want for “a closer investigation” of such vulnerabilities.

For now, chatbot customers are urged to make use of the similar warning they might use for any on-line transaction involving private knowledge and monetary transactions.

Additional information:

Kai Greshake et al, Greater than you have requested for: A Complete Research of Novel Instructed Injection Threats to Software-Built-in Massive Language Fashions, arXiv (2023). DOI: 10.48550/arxiv.2302.12173

© 2023 Science X Community

Quotation:

‘Oblique immediate injection’ assaults may upend chatbots (2023, March 9)

retrieved 17 March 2023

from https://techxplore.com/information/2023-03-indirect-prompt-upend-chatbots.html

This report is topic to copyright. Except for any truthful dealing for the aim of personal learn about or analysis, no

phase is also reproduced with out the written permission. The content material is equipped for info functions simplest.

Supply Via https://techxplore.com/information/2023-03-indirect-prompt-upend-chatbots.html